Conference on Deaf and Hearing Children in Multilingual Settings

August 10, 2019

University of Ghana, Legon

To become a competent language user, a child needs to learn the linguistic system of a language as well as the social system governing its use. Both systems are subtle and complex, even more so when a child is growing up in a multilingual environment. Families with deaf members are often bimodally bilingual, i.e. using both a sign and a spoken language. This obviously affects the process of language acquisition and socialization. Growing up in highly multilingual environments, with three or more languages, appears to be more common in some parts of the world, including parts of Africa, than is reflected in the current literature on language acquisition and socialization. Like bimodal bilingualism, trilingualism importantly affects the process of language acquisition and socialization.

The project Language Socialization in Deaf families in Africa, funded by the Leiden University Fund, has run for the past two years. A corpus was compiled of interactions in families with deaf parents in five countries in Africa, including monomodal (i.e. uniquely signing or uniquely speaking) families and bimodal trilingual families. The children in the corpus are growing up in a variety of family settings, including nuclear and single-parent families as well as extended families with a relatively large number of deaf and hearing adults and children living together in one household. This variation makes it possible to study the influence of social setting on the language development of children acquiring a sign language.

On Saturday August 10, 2019, the closing conference of this project will be held at the University of Ghana, Legon. The program will consist of presentations on language acquisition and socialization in deaf and hearing families in Africa, as well as in deaf families worldwide.

Keynote presenter:

Lynn Hou, PhD, Assistant Professor, University of California, Santa Barbara, The case study of “making hands” in one extended signing family

Presenters:

Erin Wilkinson, University of New Mexico, A study on cross-lexical activation of American Sign Language and English in young deaf signers: Deaf children connect English print words with ASL signs in a monolingual semantic judgement task

Kidane Admasu, Tano Angoua, Evans Namasaka Burichani, Marco Nyarko, Moustapha Magassouba, Lisa van der Mark, Victoria Nyst, Diyedi Sylla, Creating a longitudinal corpus of sign language development of children of deaf parents in Côte d’Ivoire, Ethiopia, Ghana, Kenya, and Mali

Marta Morgado, Leiden University, on language development of deaf children in deaf schools in Portugal

Tano Angoua, Université Houphouët Boigny, TBA

Kidane Admasu, Addis Ababa University, Caregiver’s Sensitivity and Lexical Development Patterns of Hearing Children of Deaf Adults in Ethiopia

Yves Beosso, Mission Chretienne des Sourds au Tchad, Ngambaye home signing: a preliminary analysis of the lexicon of three deaf children and their hearing families in N’Djamena, Chad

Organizing committee:

George Akanlig-Pare, University of Ghana, Dept. of Linguistics

Marco Nyarko, University of Ghana, Dept. of Linguistics

Timothy Mac Hadjah, Leiden University, LU Centre for Linguistics

Victoria Nyst, Leiden University, LU Centre for Linguistics. For more information: v.a.s.nyst@hum.leidenuniv.nl

Abstracts

Keynote

The case study of “making hands” in one extended signing family

Lynn Hou, University of California Santa Barbara

Scattered across the globe, there exist rural signing communities in multilingual areas, in which deaf and hearing children learn to sign. Yet little is known about how they learn from actual usage events, i.e. spontaneous conversations, in these communities. Little is also known about how this process naturally occurs without critical masses of child peers in an educational institution for the deaf. I address this gap with a case study of an emerging sign language, San Juan Quiahije Chatino Sign Language, or “making hands”. It is a constellation of family sign language varieties that recently originated among eleven deaf people and their families in the San Juan Quiahije municipality in Oaxaca, Mexico. The primary and dominant spoken language of the municipality is San Juan Quiahije Chatino, and the language of schooling is Mexican Spanish.

In this talk, I give a brief overview of the signing community, showing some similarities and differences in the language ecology of each family. I focus on how language emergence, acquisition, and socialization are most robust in one extended signing family with one deaf first-generation woman signer and two second-generation girl signers (one deaf and one hearing), due to the particular language ecology of this family. I take an ethnographic and usage-based approach to investigating this family, which involves extensive fieldwork in 2014-2015 and short follow-up trips in 2018 and 2019. What distinguishes this family from other families is the presence of a deaf adult signing model in residence and the age-matched pair of two girls, one deaf and one hearing, and the language, gender, and age socialization of these persons as signers.

Part of the evidence for the language emergence and use is a construction-based analysis of the signers’ usage of directional and non-directional verbs from different samples of spontaneous conversation. Directional verbs, or more commonly, verb agreement morphology, have attracted scholarly attention in recent work on emerging and rural sign languages. They constitute one class of verbs of transfer and motion that “point” to (or encode) their arguments through spatial modification of verb forms. In “making hands”, signers deploy pointing and directional verb constructions for indicating arguments based on real-world space that is closely intertwined with local topography. The first-generation signer’s system treats directional verbs as verb islands and second-generation signers learn them piecemeal, although they do not generalize them to other verbs beyond what is found in the input. These findings demonstrate how one aspect of language development differs in one community from other signing communities documented, as in the case of Nicaraguan Sign Language and Israeli Sign Language. The findings also highlight the role of usage events, along with the benefits of visual input, in the emergence and acquisition of directionality in an emerging, family-based sign language. Finally, the findings are supported by ethnographic observations of how hearing children learn to sign and are socialized as competent signers in particular language ecologies.

Caregiver’s Sensitivity and Lexical Development Patterns of Hearing Children of Deaf Adults in Ethiopia

Kidane Admasu, Leiden University

A deaf family is one in which deaf parents use sign language with their deaf and/or hearing children and in which the children are in contact with deaf cultural behavior. Like hearing caregivers, deaf caregivers play an integral role in promoting the overall functioning of the family unit. Sensitive/responsive caregiving refers to a parenting, caregiving, and effective teaching practice. Sensitive caregiving may play an important role in promoting the children’s language development. This study focuses on the notion of sensitivity and the lexical development of hearing children of deaf adults. It examines differences in sensitivity scores and their relationship to the lexical input experienced in interactions between deaf parents and their hearing children. It was hypothesized that if the sensitivity score is high, there will be high lexical input from the caregiver, and that if the sensitivity score is low, there will be low lexical input from the caregiver.

The data were collected in Addis Ababa, the capital city, where marriages between deaf partners are common. The participants were six deaf families with one or more hearing children. The natural interactions between Deaf parents (primary caregivers) and their children were recorded at intervals of 8 weeks and 3 months over a period of approximately 2 years. At the beginning of the project, the children were 1 ½ to 3 years old. The longitudinal recordings were annotated with time alignment in ELAN. Observations of and interviews with the deaf parent participants were also conducted. Sensitivity was scored with a coding scheme adapted from Ainsworth’s sensitivity versus insensitivity observation scale to allow for assessment of sensitivity as received by the child. The lexical input was also analyzed in an Excel sheet to determine the quantity of lexical items in each round of recorded videos.

The findings indicate that most deaf caregivers’ sensitivity scores range from very sensitive (8) to quite sensitive (4). Deaf caregivers were responsive/sensitive to their hearing children’s signals, needs and demands. The findings also indicate that the relationship between sensitivity scores and the quantity and diversity of lexical input was variable: the two did not run parallel to each other. No correlation was found between each participant’s sensitivity score and the caregiver’s lexical input.

Deaf parents’ and their hearing children’s gestures and signs in the expression of negation: the case of rural and urban deaf families of Côte d’Ivoire

Angoua Tano, Félix Houphouët Boigny University, Cocody, Abidjan (Côte d’Ivoire)

Negation in sign languages is mostly expressed by manual and non-manual markers (Pfau and Quer 2002; Pfau 2015). The headshake is a non-manual negation marker that originated as the opposite of bowing to somebody, a movement symbolizing obedience (Jakobson 1972). Thus, according to Pfau and Steinbach (2013), the movement of the head to express negation in sign languages is a linguistic element grammaticalized from a gestural input. This paper examines the acquisition and expression of negation by children in Bouakako Sign Language (LaSiBo) and the variety of American Sign Language used for deaf education in Côte d’Ivoire (ASL-CI).

LaSiBo is used by seven deaf people in the village of Bouakako (in the south-west of Côte d’Ivoire), where a considerable number of hearing people use sign-supported speech. The deaf signers are not in school, have family ties and are the first generation identified. A descriptive analysis of LaSiBo is presented in Tano (2016). ASL-CI, on the other hand, is mostly used in cities by educated deaf people. This language was introduced in Côte d’Ivoire in 1974 by Andrew Foster for deaf education.

This study on negation is an analysis of gestures related to semantics. There is a lack of studies on language socialization in Africa, especially on children with deaf parents. Bilingualism within families and sign language acquisition/development for children born to these families are still understudied; hearing children of deaf parents are even less studied. For children born to deaf parents, a semantic aspect like the expression of negation is interesting to observe. Bloom (1970) suggests three types of negative meanings in the early speech of children learning English. These categories are rejection (negations in which “the referent actually existed or was imminent within the contextual space of the speech event and was rejected or opposed by the child”); denial (the negation “asserted that an actual predication was not the case”); and nonexistence (“the referent was not manifest in the context, where there was an expectation of its existence, and was correspondingly negated in the linguistic expression”) (Bloom, 1970:173). Do negation markers such as shaking the index finger, the head, or the body (trunk) fit into the categories proposed by Bloom (1970)? As the target families live in different environments, are there any differences that can be observed in the construction of negation? What about the position of the negation particle within a sentence? Is the grammaticalization of the gesture effective in this context? These were some of the questions that motivated this study.

Six young children under five years of age from five families were recorded. In each family at least one of the parents is deaf, and the families use different sign languages. Three families live in a rural area, in the village of Bouakako, where children are mainly exposed to Dida (the surrounding spoken language) and LaSiBo. The remaining two families live in Abidjan, an urban area and the most important town of Côte d’Ivoire, and use ASL-CI. The parents in the three rural families are deaf-hearing couples, while those in the urban families are deaf-deaf couples. The children in Abidjan are exposed to French. All target children for this study are hearing, so they are developing bilingually and bimodally. The corpus is composed of 30-minute recordings of interactions between children and their deaf parents, made during a series of home visits taking place once every six weeks from July 2017 to July 2018, with a total of approximately 840 minutes of recording. Deaf parents were asked to discuss any topic with the target child as they would daily.

Our first results show that in both Bouakako and Abidjan, negation is expressed in the same way by adults and their respective children as well as in the gestures of hearing non-signing people in the community they belong to. Gestures of hearing non-signers were observed in the field during the recording sessions. The signs used to express negation lie on a continuum of gestures used by the hearing community in general and introduced into sign languages. In most cases, we find a shaking index finger, a shaking head or a shaking body. The latter was found to be used exclusively by children (Figure 1). The meanings of these signs fall mostly in the categories of rejection and nonexistence. However, in one family in Abidjan, we also notice the use of an ASL-CI sign ‘NOT’ by both parents and the child, because ASL-CI is the main language of communication between them. The target child already has noticeable knowledge of ASL-CI given her age. As for the position of the negation particle within a sentence, little can be said yet, as an in-depth analysis has not been started. We do observe, however, that in most cases the negation appears as a subsequent turn in contexts such as a question:

Father: YOU SLEEP ‘Do you want to sleep?’

Child: NO (headshake)

We can conclude that to express the notion of negation, children use manual and non-manual markers similar to the gestures of hearing people. Often shared with the wider hearing community, the manual and the non-manual markers seem to have derived from co-speech gestures (Wilcox, 2009). The grammaticalization of these negation signs into linguistic negation markers in LaSiBo needs to be studied in depth, including whether only one of the two markers (manual or non-manual) is obligatory and how the headshake spreads, i.e. whether it is co-articulated only with the manual negator or extends to neighboring signs.

Figure 1: Side-to-side body movement for negation by child in LaSiBo

References

Bloom, L. (1970). Language development: Form and function in emerging grammars. New York, NY: MIT Research Monograph, No 59.

Jakobson, R. (1972). Motor signs for ‘Yes’ and ‘No’. Language in Society 1, 91-96.

Pfau, R. (2015). A Featural Approach to Sign Language Negation. Negation and Polarity: Experimental Perspectives, pp. 45-74.

Pfau, R., & Quer, J. (2002). V-to-Neg raising and negative concord in three sign languages. Rivista di Grammatica Generativa 27, 73–86.

Pfau, R., & Steinbach, M. (2013). Headshakes in Jespersen’s Cycle. Paper presented at 11th Conference on Theoretical Issues in Sign Language Research, July, London.

Tano, A. (2016). Etude d’une langue des signes émergente de Côte d’Ivoire: l’exemple de la Langue des Signes de Bouakako (LaSiBo). PhD thesis, Leiden University. Utrecht: LOT.

Ngambaye home signing: a preliminary analysis of the lexicon of three deaf children and their hearing families in N’Djamena, Chad

Yves Beosso, Christian Mission for the Deaf, Aledo, Texas, USA

Decades ago, before the French colonization in Chad in the 1960s, no school for the deaf existed in the country. How did deaf children and youths live, and what sort of language did they use? This is the research question. The situation lasted until 1976, when the late Dr. Andrew Foster, the first African American missionary to pioneer gospel work among the deaf through education, began working in Chad. Dr. Foster was the first to bring a sign language, ASL (American Sign Language), to the deaf in Chad. Before his arrival, deaf Chadians therefore used homemade sign language to communicate among themselves and with their family members.

More than 200 dialects are spoken in Chad. Mine is called Ngambaye; it is my mother tongue. Ngambaye is widely spoken in south-central Chad, and it is also popular among speakers of many other dialects as a result of its strong social, political, cultural and religious influence in the country.

Allah Bienvenu (Allah means God in Ngambaye, and Bienvenu is French for Welcome) is one of our primary 6 pupils. He is 11 years old and was born deaf into a family living about 7 km away from our school site. It is interesting to watch how some members of his family communicate with him through homemade sign language.

CHSL is widely used among families and their deaf children at home, in churches and marketplaces, and even in schools for the deaf among the deaf pupils themselves. It is very easy to use for communicating with deaf children and adults who have never been to school. CHSL has no grammatical principles as yet; the study is still ongoing in order to develop them. Deaf children use it freely without having learned it, which means that this language is a pure sign language they were born with.

A study on cross-lexical activation of American Sign Language and English in young deaf signers: Deaf children connect English print words with ASL signs in a monolingual semantic judgement task

Erin Wilkinson¹,⁴, Agnes K. Villwock²,⁴, BriAnne Amador¹, Pilar Piñar³,⁴, & Jill P. Morford¹,⁴

¹University of New Mexico, ²Universität Hamburg, ³Gallaudet University, ⁴NSF Science of Learning Center in Visual Language & Visual Learning (VL2)

Studies on hearing bilinguals have consistently revealed that during lexical processing, the non-target language is not fully inhibited, but rather simultaneously activated with the target language (Dijkstra & Van Heuven, 2002; Marian & Spivey, 2003; Van Hell & Dijkstra, 2002). Previous research on this phenomenon of cross-language activation has shown that – despite the fact that signed and spoken languages do not share the same modality – deaf and hearing bilingual adults activate signs when reading printed words in a monolingual semantic judgment task (Morford et al., 2011; 2014; Kubus et al., 2015). However, to date, the time-point in development at which signers experience cross-language activation of a signed and a spoken language remains unknown. In order to address this gap in the literature, we investigated the processing of written words in deaf junior high school students who were bilingual in American Sign Language (ASL) and English. Following the implicit priming paradigm by Morford et al. (2011; adapted from Thierry & Wu, 2007), participants completed a monolingual English semantic judgment task. Half of the English word pairs had phonologically related translation equivalents in ASL (dumb–stupid), whereas half had unrelated translation equivalents (simple–easy). Phonologically related translation equivalents shared two of three phonological parameters (handshape, location and/or movement). The experimental group consisted of 39 deaf ASL-English bilingual children (age range = 11–15 years; grade level range = Grade 5-8). As a control group, we tested 26 hearing English monolinguals (age range = 11–14 years).

Two hypotheses were investigated:

- Hypothesis 1: Deaf bilingual junior high school students already have connections between signs and print words (Hermans et al. 2008; Ormel et al. 2012), and will show an effect of ASL phonology in their response times during a monolingual English semantic judgment task.

- Hypothesis 2: Deaf bilingual junior high school students have not yet developed connections between signs and print words – hence, our participants will not show any effect of ASL co-activation on a monolingual English semantic judgment task.

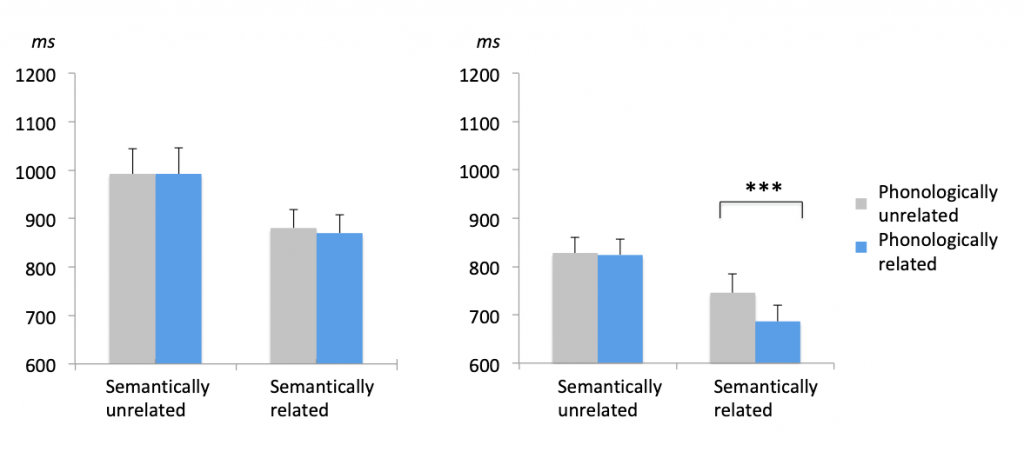

Responses were analyzed for semantically related and unrelated pairs separately using mixed effects linear regression with fixed effects of group and phonology, and random effects of participants and items. Results revealed that the deaf group, but not the hearing controls, displayed an effect of cross-language activation in the response time data. More precisely, the deaf children showed a facilitation effect – that is, they responded significantly faster to two semantically related English words that had phonologically related ASL translation equivalents than to semantically related words with phonologically unrelated ASL translations (p < 0.001; see Figure 1). Furthermore, in all conditions, the response times of deaf children were significantly shorter than those of hearing controls (p < 0.001). Importantly, the groups did not differ in accuracy scores (p > 0.1).

Therefore, our findings support Hypothesis 1: Deaf junior high school students who are learning ASL and English seem to already have developed connections between signs and printed words. In sum, the present study provides evidence that being bilingual in two languages, which differ in modality, does not cause confusion in deaf children. On the contrary, despite more limited exposure to English print and restricted access to English phonology relative to their hearing peers, deaf bilingual junior high school students display an advantage in processing the semantics of written English words.

Figure 1. Response times to semantically unrelated and related English word pairs with phonologically unrelated and related ASL translations for hearing controls (left) and deaf junior high school students (right). Deaf children show a facilitation effect in the semantically related/phonologically related condition.

References:

Dijkstra, A., & Van Heuven, W. J. B. (2002). The architecture of the bilingual word recognition system: From identification to decision. Bilingualism: Language and Cognition, 5, 175-197.

Kubuş, O., Villwock, A., Morford, J. P., & Rathmann, C. (2015). Word recognition in deaf readers: Cross-language activation of German Sign Language and German. Applied Psycholinguistics, 36, 831–854.

Marian, V., & Spivey, M. J. (2003). Competing activation in bilingual language processing: Within- and between-language competition. Bilingualism: Language and Cognition, 6, 97-115.

Morford, J. P., Kroll, J. F., Piñar, P., & Wilkinson, E. (2014). Bilingual word recognition in deaf and hearing signers: Effects of proficiency and language dominance on cross-language activation. Second Language Research, 30, 251–271.

Morford, J. P., Wilkinson, E., Villwock, A., Piñar, P. & Kroll, J. F. (2011). When deaf signers read English: Do written words activate their sign translations? Cognition, 118, 286–292.

Ormel, E., Hermans, D., Knoors, H. & Verhoeven, L. (2012). Cross-Language effects in visual word recognition: The case of bilingual deaf children. Bilingualism: Language and Cognition, 15 (2), 288–303.

Thierry, G., & Wu, Y. J. (2007). Brain potentials reveal unconscious translation during foreign language comprehension. Proceedings of the National Academy of Sciences, 104, 12530-12535.

Van Hell, J. G. & Dijkstra, A. (2002). Foreign language knowledge can influence native language performance in exclusively native contexts. Psychonomic Bulletin & Review, 9, 780–789.

Deaf handicraft exhibit during the conference

Media publications

12-08-2019 : Conduct adequate research on hearing impairment

12-08-2019 : First ever summer school in Africa for and by deaf academics

xx-08-2019 : A special moment with the hearing impaired